Introduction

In the ocean of business data, approximately 2.5 quintillion bytes are created each day. That’s like filling the Library of Congress 500 times over with fresh information. Standard data warehousing methods are like trying to navigate this ocean in a rickety sailboat. In contrast, the Data Vault solution is like a highly sophisticated submarine, equipped with all the tools to dive into these depths and bring up valuables.

Data Vault is a specific approach to designing enterprise data warehouses. It handles high volumes of data and adapts smoothly to change. Designed for the agile enterprise, it hinges on three foundational principles: flexibility, scalability, and resilience. Data Vault makes this possible through a modular structure that accommodates changes without disrupting the existing data ecosystem.

Let’s demystify this unique warehousing solution.

Unveiling the Power of the Data Vault Solution

Incorporating Data Vault into your data warehouse can improve data integration and make data quality more consistent.

- Detailed steps on how to implement Data Vault 2.0.

- Real-world case study of a successful Data Vault implementation.

Let’s walk through the steps to implement the Data Vault method.

Step-by-step Guide to Implementing Data Vault

Data Vault effectively handles the ever-changing business dynamics. Let’s break down the process into manageable steps:

Step 1: Understanding the Basics of the Data Vault Approach

Data Vault 2.0 is an evolution of the Data Vault architecture, emphasizing agility, flexibility, and scalability in data warehousing. It’s a holistic approach that encompasses not just the storage of data but also the methodologies and processes around it. This advanced version of Data Vault incorporates new best practices and hash keys for improved performance and scalability. It is designed to be database-agnostic, meaning it can be implemented on any platform that supports the best practices of typical Data Vault data flow, including ELT (Extract, Load, Transform) patterns and the usage of hashing functions for data deduplication and consistency.
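To make the role of hash keys concrete, here is a minimal sketch of how a hash key might be derived from a business key. The function name `hash_key`, the `||` delimiter, the trim-and-upper-case normalization, and the choice of SHA-256 are all assumptions for illustration; real implementations pick their own algorithm and normalization rules.

```python
import hashlib


def hash_key(*business_key_parts: str, delimiter: str = "||") -> str:
    """Return a deterministic hash key for one or more business key parts."""
    # Trim and upper-case each part so "c-001 " and "C-001" hash identically.
    normalized = [part.strip().upper() for part in business_key_parts]
    # The delimiter keeps ("AB", "C") distinct from ("A", "BC").
    payload = delimiter.join(normalized)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


customer_hk = hash_key("C-001")
same_customer_hk = hash_key("  c-001 ")
customer_order_hk = hash_key("C-001", "SO-9001")  # composite key for a relationship

print(customer_hk == same_customer_hk)  # True: formatting differences collapse
print(len(customer_hk))                 # 64 hex characters for SHA-256
```

Because the key is computed from the business key alone, any loader on any platform can derive it independently, which is what makes deduplication and parallel loading straightforward.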

The three main pillars of Data Vault 2.0 (Methodology, Architecture, and Model) are interconnected to form a comprehensive system.

The Methodology

The Data Vault 2.0 methodology is a refined iteration of the original Data Vault system, incorporating best practices for a modern data warehouse. It is consistent and repeatable, ensuring a reliable, pattern-based approach to data storage and retrieval. This methodology is not static: the original Data Vault was conceived by Dan Linstedt in the 1990s, and the approach has evolved ever since. The introduction of hash keys for increased performance and scalability is a hallmark of Data Vault 2.0, addressing the ever-growing data volumes and complex analytical needs of today’s businesses.

The Architecture

The Model

Lastly, the Model is flexible and scalable, centered around the ‘hub and spoke’ design, which is the essence of the Data Vault structure. It facilitates the management and retrieval of data in an efficient and orderly manner.

Step 2: Identifying Key Components of Data Vault

The three primary structures of the Data Vault 2.0 model are Hubs, Links, and Satellites. Hubs are the key reference points storing unique business keys, ensuring data is indexed and can be accessed efficiently. Links are the connectors that establish relationships between these Hubs and can represent events or transactions, providing context and structure to the data. Satellites surround both Hubs and Links, adding descriptive details, tracking changes over time, and allowing for a rich historical context. Understanding these components helps you place data deliberately, which in turn makes the warehouse easier to integrate and scale.
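As a rough illustration of the three structures, the sketch below creates one Hub per business concept, one Link, and one Satellite in an in-memory SQLite database. The table and column names (`hub_customer`, `customer_hk`, `hash_diff`, and so on) are hypothetical and follow a common naming habit rather than any mandated standard.

```python
import sqlite3

DDL = """
-- Hub: one row per unique business key.
CREATE TABLE hub_customer (
    customer_hk   TEXT PRIMARY KEY,
    customer_id   TEXT NOT NULL UNIQUE,
    load_date     TEXT NOT NULL,
    record_source TEXT NOT NULL
);
CREATE TABLE hub_order (
    order_hk      TEXT PRIMARY KEY,
    order_id      TEXT NOT NULL UNIQUE,
    load_date     TEXT NOT NULL,
    record_source TEXT NOT NULL
);
-- Link: one row per relationship between hubs.
CREATE TABLE link_customer_order (
    customer_order_hk TEXT PRIMARY KEY,
    customer_hk       TEXT NOT NULL REFERENCES hub_customer (customer_hk),
    order_hk          TEXT NOT NULL REFERENCES hub_order (order_hk),
    load_date         TEXT NOT NULL,
    record_source     TEXT NOT NULL
);
-- Satellite: descriptive attributes, one row per detected change.
CREATE TABLE sat_customer_details (
    customer_hk   TEXT NOT NULL REFERENCES hub_customer (customer_hk),
    load_date     TEXT NOT NULL,
    record_source TEXT NOT NULL,
    hash_diff     TEXT NOT NULL,
    name          TEXT,
    city          TEXT,
    PRIMARY KEY (customer_hk, load_date)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(DDL)

tables = sorted(
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
)
print(tables)
```

Note that the Satellite's primary key includes `load_date`: that is what lets it hold several versions of the same customer's attributes side by side.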

Step 3: Implementing Data Vault in Your Data Warehouse

Begin by identifying the business keys in your data; these will form your Hubs. Next, establish your Links by mapping out the relationships between these keys, so that no business key is left isolated. Finally, enrich the model with Satellites, which hold the attributes that give life and context to your data. Remember, the focus here should be on integrating disparate data sources rather than transforming data to fit a predefined model. This results in a flexible, adaptable system. This phased approach ensures that your data warehouse evolves into a well-organized repository of information, capable of navigating the turbulent seas of business change.
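The three steps above can be sketched in plain Python. Everything here is illustrative: the staged rows, the `crm` record source, and the helper names `hash_key` and `hash_diff` are assumptions, and in-memory dictionaries stand in for real tables.

```python
import hashlib
from datetime import datetime, timezone


def _digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def hash_key(*parts: str) -> str:
    """Hash key over normalized business key parts."""
    return _digest("||".join(p.strip().upper() for p in parts))


def hash_diff(*attributes: str) -> str:
    """Hash over descriptive attributes, used to detect changes."""
    return _digest("||".join(attributes))


# Hypothetical staged rows from one source system.
staged_rows = [
    {"customer_id": "C-001", "order_id": "SO-1", "customer_name": "Acme"},
    {"customer_id": "C-001", "order_id": "SO-2", "customer_name": "Acme"},
    {"customer_id": "C-002", "order_id": "SO-3", "customer_name": "Globex"},
]

audit = {"load_date": datetime.now(timezone.utc).isoformat(), "record_source": "crm"}

hub_customer, hub_order, link_customer_order = {}, {}, {}
sat_customer = []   # append-only history of descriptive attributes
latest_diff = {}    # customer_hk -> most recent hash_diff

for row in staged_rows:
    customer_hk = hash_key(row["customer_id"])
    order_hk = hash_key(row["order_id"])

    # 1. Hubs: one row per distinct business key.
    hub_customer.setdefault(customer_hk, {"customer_id": row["customer_id"], **audit})
    hub_order.setdefault(order_hk, {"order_id": row["order_id"], **audit})

    # 2. Link: one row per distinct relationship between the hubs.
    link_hk = hash_key(row["customer_id"], row["order_id"])
    link_customer_order.setdefault(
        link_hk, {"customer_hk": customer_hk, "order_hk": order_hk, **audit}
    )

    # 3. Satellite: add a row only when the descriptive attributes changed.
    diff = hash_diff(row["customer_name"])
    if latest_diff.get(customer_hk) != diff:
        sat_customer.append(
            {"customer_hk": customer_hk, "hash_diff": diff,
             "customer_name": row["customer_name"], **audit}
        )
        latest_diff[customer_hk] = diff

print(len(hub_customer), len(hub_order), len(link_customer_order), len(sat_customer))
```

The source data is stored as it arrives; nothing is reshaped to fit a reporting model at this stage.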

The system’s design facilitates the management of vast data sets while maintaining integrity, auditability, and adaptability, making it an excellent choice for organizations that anticipate changes in data structure or require rigorous historical tracking. Next, we dive straight into concrete examples of how businesses benefited from the Data Vault approach.

Real Case Study: Successful Implementations of Data Vault

How New York University (NYU) Improved Data Integration with the Data Vault Method

Integrating diverse data sources can be a challenge. NYU reaped success by implementing Data Vault, resulting in improved integration efficiency. The key to their triumph? Hubs, Links and Satellites. Data Vault changed the cumbersome ETL process for them, handling volume, variety, and velocity smoothly. For an in-depth look at NYU’s success with our Data Vault implementation, refer to the full case study here.

Now you have a good understanding of the Data Vault approach and an example of its successful implementation. You’re all set to unlock new capabilities in your own data warehouses. But remember, every organization’s data needs are unique and one size does not fit all. Choose judiciously!

Data Vault Automation

Data Vault excels in its automation-friendly design, allowing for the creation of self-regulating, self-monitoring packages that ensure data workflows are as efficient as they are reliable. It’s not merely about the ability to generate tables or scripts. With Data Vault Automation, the data flows through the warehouse with consistency, using repeatable and reliable load patterns. This automation extends beyond routine tasks, encompassing a strategic deployment and scheduling system that transforms the way data is managed, allowing businesses to scale their data solutions with confidence.
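Because every Hub is loaded with the same pattern, the SQL can be generated from metadata instead of written by hand. The sketch below is one hypothetical way to do that, not how any particular automation tool works: `HubSpec`, `hub_ddl`, `hub_load_sql`, and the `stg_orders` staging table (with placeholder hash key values) are invented for illustration.

```python
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class HubSpec:
    """Hypothetical metadata record describing one hub."""
    name: str           # "customer" -> table hub_customer, key customer_hk
    business_key: str   # business key column in the staging table
    staging_table: str  # staging table that already carries <name>_hk


def hub_ddl(spec: HubSpec) -> str:
    return (
        f"CREATE TABLE IF NOT EXISTS hub_{spec.name} ("
        f"{spec.name}_hk TEXT PRIMARY KEY, "
        f"{spec.business_key} TEXT NOT NULL, "
        "load_date TEXT NOT NULL, "
        "record_source TEXT NOT NULL)"
    )


def hub_load_sql(spec: HubSpec) -> str:
    hk = f"{spec.name}_hk"
    # Insert only keys the hub has not seen yet, so the load is repeatable.
    return (
        f"INSERT INTO hub_{spec.name} ({hk}, {spec.business_key}, load_date, record_source) "
        f"SELECT stg.{hk}, stg.{spec.business_key}, MIN(stg.load_date), MIN(stg.record_source) "
        f"FROM {spec.staging_table} AS stg "
        f"WHERE NOT EXISTS (SELECT 1 FROM hub_{spec.name} AS h WHERE h.{hk} = stg.{hk}) "
        f"GROUP BY stg.{hk}, stg.{spec.business_key}"
    )


specs = [
    HubSpec("customer", "customer_id", "stg_orders"),
    HubSpec("order", "order_id", "stg_orders"),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE stg_orders (customer_hk TEXT, customer_id TEXT, "
    "order_hk TEXT, order_id TEXT, load_date TEXT, record_source TEXT)"
)
conn.executemany(
    "INSERT INTO stg_orders VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("hk-c1", "C-001", "hk-o1", "SO-1", "2024-01-01", "crm"),  # placeholder hash keys
        ("hk-c1", "C-001", "hk-o2", "SO-2", "2024-01-01", "crm"),
    ],
)

for spec in specs:
    conn.execute(hub_ddl(spec))
    conn.execute(hub_load_sql(spec))
    conn.execute(hub_load_sql(spec))  # running the load again adds nothing

hub_counts = {
    spec.name: conn.execute(f"SELECT COUNT(*) FROM hub_{spec.name}").fetchone()[0]
    for spec in specs
}
print(hub_counts)
```

Adding another Hub then means adding one `HubSpec` entry rather than writing new load code, which is the kind of repeatability that automation tools build on.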

Tools That Empower Data Vault Implementations

Numerous tools are available that offer Data Vault automation capabilities, each with its unique advantages and constraints. Below is a list of our preferred partners for effective Data Vault implementations:

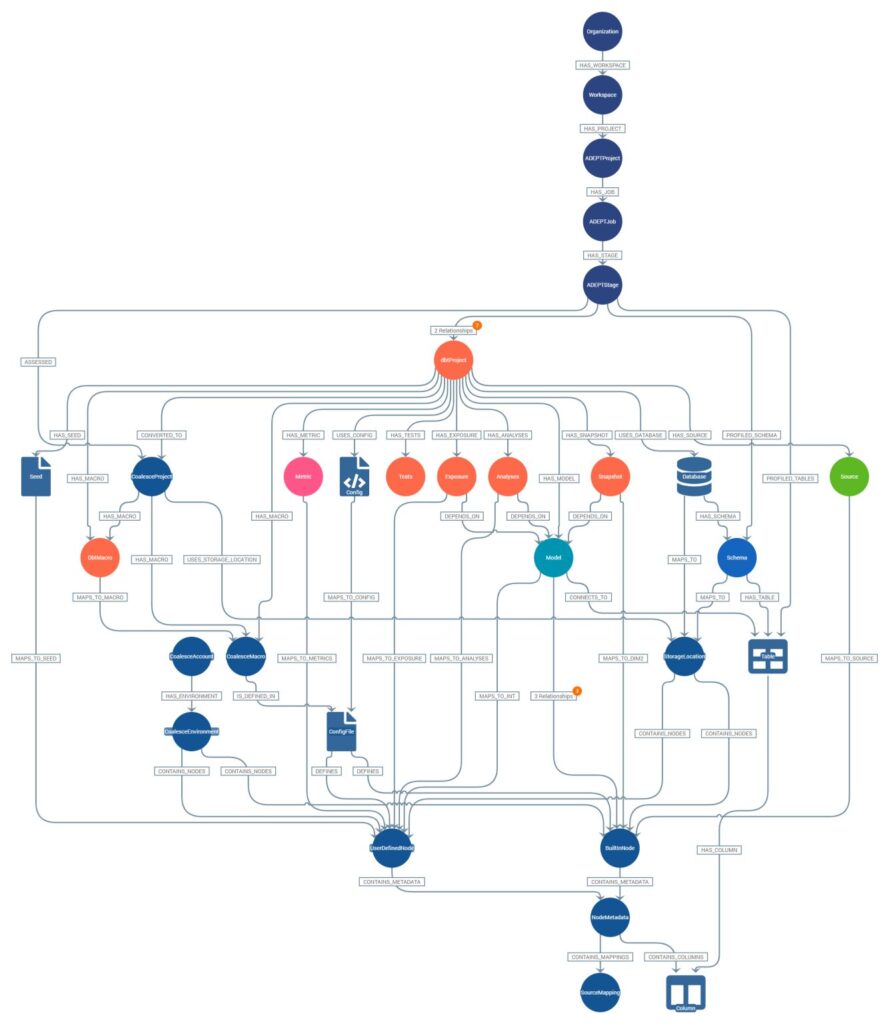

- ADEPT: Our proprietary tool, renowned for its versatility in data migration. ADEPT works across various platforms, adapting to your needs with remarkable flexibility.

- VaultSpeed: Tailored for Data Vault acceleration, VaultSpeed integrates seamlessly with multiple ETL tools, propelling your data strategy forward with agility.

- erwin by Quest: Positioned as an all-encompassing enterprise solution, erwin by Quest delivers robust data modeling, a comprehensive data catalog, and a universal automation framework.

- WhereScape: WhereScape provides a solid foundation for Oracle, SQL Server, and Snowflake environments.

- AutomateDV: Integrating with dbt, AutomateDV offers an exceptional tool for Data Vault Automation, setting the stage for efficiency and sophistication in your data management.

Enhancing Your Data Warehouse with Data Vault

- The essential role of Data Vault in tackling big data

- Overcoming data integration hurdles using Data Vault

- Ensuring data quality and consistency with the help of Data Vault

The Role of Data Vault in Data Warehousing

The Data Vault approach is a critical component in navigating the expanse of big data. This approach offers much-needed sanity in data management. Key to this are consistency, clarity, and the assurance that data output is reliable enough for accurate decision-making.

The need for Data Vault becomes more evident when dealing with large volumes of data. With traditional data warehousing methods, managing such volumes could become a logistical nightmare. Data Vault addresses this concern by keeping data organized, manageable, and efficient to work with.

Consistent and reliable data is non-negotiable in today’s data-driven world. Data Vault keeps data consistent across the board, eliminating variances that could lead to misinformed conclusions and decisions.

Overcoming Data Warehousing Challenges with Data Vault

The integration of diverse data sets is a common trouble area in data warehousing. However, the implementation of Data Vault can greatly alleviate these pains.

Data Vault exhibits a unique architecture, designed to absorb data from multiple sources with varied formats, without compromising the quality and structure of the data. This advantage significantly eases the integration process, ensuring a seamless flow of information and data across different platforms and sources.
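A small sketch of what absorbing differently shaped sources can look like: two hypothetical feeds (a CRM export as dictionaries and a billing export as tuples) land on the same Hub because they share a business key, while each source keeps its own Satellite. The feed layouts, the `register` helper, and the normalization rule are assumptions for illustration.

```python
import hashlib


def hash_key(business_key: str) -> str:
    """Hash key over the trimmed, upper-cased business key."""
    return hashlib.sha256(business_key.strip().upper().encode("utf-8")).hexdigest()


# Two hypothetical sources describing customers in different formats.
crm_rows = [{"CustomerNo": "c-001", "FullName": "Acme Corp"}]
billing_rows = [("C-001 ", "acme@example.com"), ("C-002", "globex@example.com")]

hub_customer = {}          # shared across all sources
sat_customer_crm = []      # one satellite per source keeps formats separate
sat_customer_billing = []


def register(business_key: str, record_source: str) -> str:
    """Add the business key to the hub if it is new; return its hash key."""
    key = hash_key(business_key)
    hub_customer.setdefault(
        key,
        {"customer_id": business_key.strip().upper(), "record_source": record_source},
    )
    return key


for row in crm_rows:
    key = register(row["CustomerNo"], "crm")
    sat_customer_crm.append({"customer_hk": key, "full_name": row["FullName"]})

for customer_no, email in billing_rows:
    key = register(customer_no, "billing")
    sat_customer_billing.append({"customer_hk": key, "email": email})

print(len(hub_customer))  # 2: C-001 from both sources resolves to one hub row
```

Neither source had to be reshaped to match the other; the Hub is the only point where they meet, and `record_source` records which system first supplied each key.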

Quality and consistency are non-negotiable in data handling and warehousing. Data Vault comes into play to create an environment that prioritizes data quality and consistency. By establishing a mechanism that reduces errors and inconsistencies, it ensures a high standard of data, indispensable in today’s data-driven businesses.

Diving Deeper into Data Vault

- Key Concepts in Data Vault

- How Data Vault has evolved over time

- Comparative analysis with other warehousing techniques.

- Addressing common inquiries

- Resources for detailed learning

Understanding the Core Concepts of Data Vault

The Data Vault method revolves around three principal components: the Hub, the Link, and the Satellite. This three-part structure is the heart of the model.

The Hub represents the business concept. It’s where unique business keys reside. A consistent feature of the Data Vault Model, Hubs remain static in a dynamic business environment. They are the patient bearers of business data in a Data Vault diagram.

Links act as the glue. They seamlessly bind business keys from various Hubs, facilitating many-to-many relationships. Every link represents an explicit business relationship, making the model highly relational.

The last but equally crucial component, Satellites, house descriptive data about business keys (Hubs) or their relationships (Links). In essence, they are the history keepers in a Data Vault Model.
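To show what keeping history means in practice, here is a minimal sketch of an as-of lookup over one customer's Satellite rows. The sample history and the `as_of` helper are hypothetical; the lookup relies on ISO-formatted date strings sorting in chronological order.

```python
from bisect import bisect_right

# Hypothetical satellite rows for one hub key, ordered by load date.
history = [
    ("2024-01-01", {"city": "Boston"}),
    ("2024-03-15", {"city": "Chicago"}),
    ("2024-07-01", {"city": "Denver"}),
]


def as_of(rows, date):
    """Return the attributes in effect on `date`, or None if nothing was loaded yet."""
    load_dates = [load_date for load_date, _ in rows]
    # Index of the first row loaded strictly after `date`.
    idx = bisect_right(load_dates, date)
    return rows[idx - 1][1] if idx else None


print(as_of(history, "2024-02-10"))  # {'city': 'Boston'}
print(as_of(history, "2024-03-15"))  # {'city': 'Chicago'}
print(as_of(history, "2023-12-31"))  # None
```

Since Satellite rows are only ever appended, any past state can be reconstructed this way without restoring backups.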

The Evolution of the Data Vault Method

Data Vault is not a new kid on the block. Its roots go back to the late 20th century, and the model’s growth has been noteworthy. Envisioned to cater to a broader array of informational challenges, Data Vault has grown with the rising wave of big data and AI. How the model will further evolve in this era remains an attractive realm of exploration.

Comparing Data Vault with Other Data Warehousing Techniques

Every data warehousing technique comes with its own strengths and weaknesses – Data Vault is no exception. A comparative analysis with popular models like Star Schema and Snowflake Schema will give a comprehensive view of where it stands. This comparison might be an essential factor for decision-makers considering Data Vault for their data warehouse.

| Feature / Technique | Data Vault | Star Schema | Snowflake Schema |
|---|---|---|---|
| Key Concepts | Hubs (business keys), Links (relationships), Satellites (descriptive data) | Fact tables (quantitative data), Dimension tables (descriptive information) | Extension of Star Schema with additional normalization of dimension tables |
| Design Focus | Flexibility and scalability to handle changing data and requirements | Simplified querying and reporting for business users | Reducing data redundancy and improving query performance through normalized dimension tables |
| Pros | Adaptable to changes; supports historical data tracking; complex relationship handling | Easy to understand and use; fast query performance; simplified user access | Reduced data redundancy; flexibility and performance; better normalization |
| Cons | Complex to implement; requires specialized knowledge; potential for data redundancy | Complexity with large datasets; design complexity | Potential impact on query performance |
| Best Used For | Complex, evolving datasets; detailed historical tracking | Simplified reporting and analysis; fast query needs | Reducing redundancy in complex models; environments requiring normalization beyond Star Schema |
| Implementation Complexity | High | Low to Medium | Medium to High |
| Cost Implications | Potentially higher due to complexity and need for specialized skills | Generally lower, given the simplicity and widespread knowledge | Can vary, potentially higher due to design and maintenance considerations |
| Performance Metrics | Optimized for flexibility and change management rather than raw query speed | Optimized for fast query performance, especially with denormalized data | Balances normalization with performance, may require optimization for complex queries |
| Tool/Platform Compatibility | Wide compatibility with modern data tools and platforms, but requires careful integration planning | Broad compatibility and support across data warehousing tools and platforms | Compatible with many tools, though normalization might require additional consideration in tool selection |
| Data Security Features | Depends on implementation; can be highly secure with proper design | Security is manageable but requires attention to access control on fact and dimension tables | Similar to Star Schema, with additional considerations due to normalization |
| Real-World Use Cases | Large-scale enterprises with evolving data landscapes; industries like finance and healthcare, where data changes frequently | Retail and e-commerce for sales and inventory reporting; small to medium businesses with straightforward needs | Organizations needing detailed historical analysis; sectors with complex data models like telecommunications |
| Scalability and Flexibility | Highly scalable and flexible, designed to accommodate growth and change | Scalable with potential performance impacts in very large datasets | Scalable, with flexibility enhanced by normalization but may require careful query optimization |
| Community and Support | Growing community; resources vary by region and platform. Specialized training often required | Large, established community with extensive resources and examples | Growing community, especially among enterprises adopting modern data warehousing techniques |

Resources for Further Learning about Data Vault

For those intrigued by Data Vault, a continuously updated list of books, online courses, and forums can be a treasure trove. Hone your skills and deepen your understanding with the best resources available.

- Building a Scalable Data Warehouse with Data Vault 2.0 by Dan Linstedt and Michael Olschimke

- The Data Vault Model: A Practitioner’s Guide by Dan Linstedt

- Super Charge Your Data Warehouse: Invaluable Data Modeling Rules to Implement Your Data Vault by Dan Linstedt

- Data Vault Certification by Dan Linstedt – Official certification for mastering Data Vault techniques.

- Regular webinars by the Data Vault Alliance or certified Data Vault practitioners. (We have an upcoming webinar with VaultSpeed.)

- Interactive webinars, workshops and events hosted by industry experts, which can often be found through professional data management and analytics organizations.

Frequently Asked Questions (FAQs) on the Data Vault Solution

What is Data Vault?

Data Vault is a database modeling approach that provides long-term historical storage of data coming in from multiple operational systems. It is a method of designing highly flexible, scalable, and adaptable data structures in a data warehouse.

How does Data Vault differ from other data warehousing methodologies?

Data Vault is unique in its use of a detail-oriented, ensemble modeling approach. It differs from other methodologies like the Kimball or Inmon approaches by focusing on the ability to absorb changes over time, track the lineage of data, and ensure data is stored without loss of information.

What is new in Data Vault 2.0?

Data Vault 2.0 introduces enhancements like hash keys and optimized loading patterns for big data and real-time systems. It also emphasizes automation, scalability, and agile methodologies to accommodate the evolving landscape of data warehousing.

Is Data Vault suitable for big data?

Yes, Data Vault is well-suited for big data scenarios. Its scalable architecture can handle large volumes and varieties of data, making it an ideal choice for organizations dealing with significant amounts of structured and unstructured data.

How do automation tools help with Data Vault?

By automating repetitive tasks, products like VaultSpeed allow teams to focus on strategy and analysis rather than coding. Such tools enhance the advantages of Data Vault by providing a user-friendly platform that facilitates automation and scalability, outperforming traditional manual methods.

Does Data Vault support real-time data processing?

Data Vault 2.0, in particular, supports real-time data processing by allowing for the near-real-time ingestion of data into the Data Vault, thanks to its handling of streams and its efficient change data capture mechanisms.

How does Data Vault ensure data quality?

Data Vault ensures data quality through its design principles, which include retaining all data (even if considered erroneous) to preserve the full history. Quality checks and transformations are performed downstream, allowing for a clear separation between raw data and business-rule-applied data.

Which organizations benefit most from Data Vault?

Organizations with complex and evolving data requirements, such as those in finance, healthcare, and telecommunications, can benefit greatly from Data Vault. It’s also useful for any business that requires a robust audit trail and historical analysis capabilities.

How does Data Vault handle changing business requirements?

Data Vault’s modular design allows for easy adaptation to changes. New business concepts can be added as new Hubs or Satellites without disrupting the existing structure, making it highly agile in response to changing business requirements.

What are the challenges of implementing Data Vault?

The main challenges are the initial learning curve, the need for specialized skills to design and implement the architecture correctly, and the potential for increased complexity in data integration and transformation processes.

Can Data Vault be implemented in the cloud?

Yes, Data Vault can be implemented in both on-premises and cloud-based environments. It is compatible with various data warehousing solutions and can leverage the scalability and flexibility offered by cloud platforms.

Sealing the Data Vault: Your Move Towards Smarter Warehousing

Data Vault, with its unique approach to managing complex information, provides an impressive opportunity to redefine your data warehousing and boost your competitive edge. It has immense potential to streamline operations, improve decision-making, and scale business growth.

This model excels in its ability to handle change and minimize risk – characteristics vital to any business in the data era. Adopting a Data Vault strategy can empower your company with the agility and responsiveness it needs to thrive in a data-centric market.

Keen to get started? Let’s connect. We are trusted experts in this field. To kick off our discussion, consider this: how can the adaptive nature of Data Vault transform your existing data systems?

It’s time to vault into the future. The keys to an organized, secure, and responsive data system are within your reach.