6 Months: from conceptual data architecture to a fully loaded and tested master data hub, with 2 ETL developers.

339 Data Attributes

60 Base Tables and 8 Integration Views


Redefining Education at Yale Through Digital Transformation

Yale Overview and the Role of Data

With a long-standing reputation for academic excellence and a rich historical legacy, this esteemed university has acknowledged the pivotal role of data in effective decision-making and operational efficiency. Specifically, data is harnessed to gain valuable insights into various aspects of the institution, including student demographics, academic performance, and emerging trends. This wealth of information serves as the bedrock for informed strategic planning and the continuous enhancement of academic programs.

Furthermore, the university’s forward-thinking approach extends to preparing students for a world increasingly reliant on data. To achieve this, data science and analytics are integrated into various academic programs, equipping students with the skills needed to thrive in a data-driven environment. The university’s dedication to data also extends to its sustainability initiatives, where data-driven strategies are used to monitor and mitigate its environmental footprint and to promote sustainable practices across campus. This multifaceted approach showcases the university’s commitment to harnessing the power of data for both educational and ecological advancement.

Top Challenges Faced

- Chart of Accounts Alignment (Cost Account Hierarchy)

Aligning the chart of accounts presented challenges due to the diverse accounting practices and systems that existed. It required substantial collaboration and analysis to establish a standardized framework for financial transactions. Careful consideration was given to the various cost account hierarchies to ensure accurate representation of the organization’s financial structure.

- Supervisory Organization Alignment

Achieving consistency in reporting structures was also a challenge as unique reporting relationships and departmental hierarchies needed to be navigated. It involved engagement with stakeholders to establish a cohesive and standardized reporting system across the organization, allowing for clearer communication and streamlined managerial processes.

- Academic Structure Alignment

Harmonizing academic structures posed challenges due to variations in curriculum, programs, and the organization of academic departments across different colleges. Efforts were made to establish consistent terminology, program codes, and academic hierarchies while accommodating the unique characteristics and offerings of each college, resulting in a more streamlined and unified academic structure.

- ABAC Security Integration

Integrating Attribute-Based Access Control (ABAC) security required overcoming challenges presented by varying security policies, roles, and access levels. The project involved aligning access control policies with the overall security requirements of the organization while accommodating the specific needs of different colleges, ensuring data privacy, compliance, and secure access control across the organization’s systems.
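To make the ABAC idea concrete, the following is a minimal Python sketch of attribute-based policy evaluation. It is not the project's implementation: the `Policy` class, the `is_allowed` function, and the attribute names (`role`, `college`) are hypothetical and chosen only for illustration. It shows how one organization-wide rule and one college-specific rule can coexist under a deny-by-default check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """One hypothetical ABAC rule (names are illustrative, not from the project)."""
    action: str
    subject_attrs: dict        # attribute values the requester must have
    match_attrs: tuple = ()    # attributes that must be equal on requester and record


def is_allowed(subject: dict, resource: dict, action: str, policies: list) -> bool:
    """Permit the action if any policy matches; deny by default."""
    for p in policies:
        if p.action != action:
            continue
        has_attrs = all(subject.get(k) == v for k, v in p.subject_attrs.items())
        same_attrs = all(
            k in subject and subject[k] == resource.get(k) for k in p.match_attrs
        )
        if has_attrs and same_attrs:
            return True
    return False


# Assumed example policies: analysts see only their own college, auditors see all.
POLICIES = [
    Policy(action="read", subject_attrs={"role": "hr_analyst"}, match_attrs=("college",)),
    Policy(action="read", subject_attrs={"role": "central_auditor"}),
]

analyst = {"role": "hr_analyst", "college": "college_a"}
auditor = {"role": "central_auditor"}
record_a = {"type": "worker", "college": "college_a"}
record_b = {"type": "worker", "college": "college_b"}

print(is_allowed(analyst, record_a, "read", POLICIES))
print(is_allowed(analyst, record_b, "read", POLICIES))
```

Keeping the rules as data rather than hard-coded branches is one way a single access check can serve colleges with different policies.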

Strategy and Approach

1. Assessment

Through our tool, ADEPT, we began with a comprehensive assessment of the current landscape, identified assets that needed migration, and conducted an impact analysis to ensure a successful and informed modernization process.

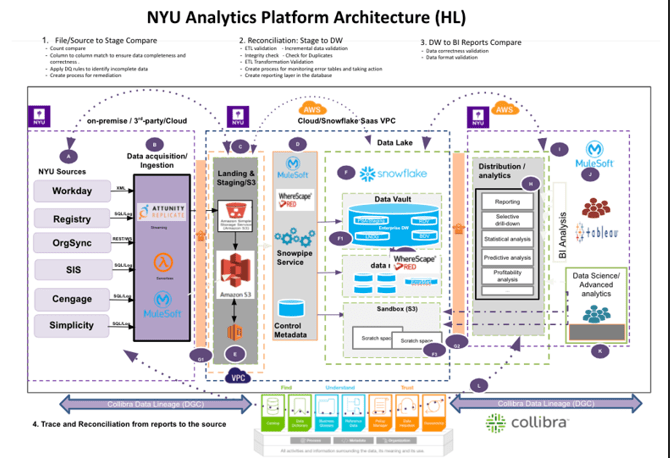

The university’s transition from on-premises and campus solutions to the cloud involved aligning existing data with Workday concepts. The migration also called for an agile approach, as the project required moving over 3000 data attributes from these source systems. This required a comprehensive understanding of the business perspective to ensure accurate data integration into the new cloud environment.

2. Utilize Automation Technology for Talend Jobs

We automated Talend jobs to make data processing efficient and streamlined. This automation removes manual intervention and accelerates data workflows.

3. Implement Automated Unit and Quality Tests

We built automated unit and quality tests as part of our strategy. These tests ensure the reliability and robustness of the data load process by detecting errors and verifying data integrity. Additionally, they facilitate graceful restart and recovery processes, minimizing disruptions and ensuring smooth data flow.
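As an illustration of how quality checks and graceful restart can work together, here is a minimal Python sketch. The project itself used Talend, so this is not its implementation: the function names, the checkpoint file format, and the column names (`worker_id`, `supervisory_org`) are assumptions made for the example. Each batch is validated before loading, and progress is saved after every batch so a rerun skips work that already succeeded.

```python
import json
from pathlib import Path


def check_batch(rows, key="worker_id", required=("worker_id", "supervisory_org")):
    """Return a list of problems found in one batch (an empty list means pass)."""
    problems = []
    seen = set()
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) in (None, ""):
                problems.append(f"row {i}: missing {col}")
        k = row.get(key)
        if k is not None and k in seen:
            problems.append(f"row {i}: duplicate {key} {k}")
        seen.add(k)
    return problems


def load_with_restart(batches, load_fn, checkpoint: Path):
    """Load batches in order, skipping those already recorded in the checkpoint."""
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else []
    for name, rows in batches:
        if name in done:
            continue  # loaded successfully in an earlier run
        problems = check_batch(rows)
        if problems:
            # Stop before loading bad data; earlier batches stay checkpointed.
            raise ValueError(f"batch {name} failed quality checks: {problems}")
        load_fn(name, rows)
        done.append(name)
        checkpoint.write_text(json.dumps(done))  # persist progress after each batch
    return done
```

If a batch fails its checks, the load stops with an error. After the source data is corrected, calling `load_with_restart` again with the same checkpoint path resumes at the failed batch instead of reloading everything.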

4. Incorporate Web Services for Seamless Integration

Through the integration of web services, we provided seamless communication and data integration capabilities. This facilitated efficient access to data services and enabled smooth interaction between different system components.

Transforming Data Into Profit

Robust Technology and Futuristic Roadmap

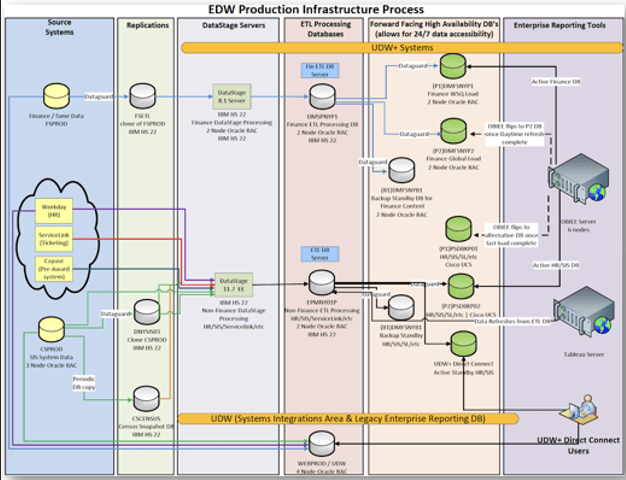

We assisted them in implementing a robust technology platform and developing a futuristic roadmap. This allowed them to stay ahead of the curve, ensuring they have a solid foundation to support their evolving business needs and leverage emerging technologies for innovation and efficiency.

Ability to Scale and Handle Changing Business Requirements

Our approach enabled them to adopt scalable solutions, facilitating their ability to accommodate changing business requirements. This scalability empowers them to expand operations, handle increased data volumes, adapt to market demands, and ensure a seamless and agile business environment.

The solution is designed to handle both daily and intraday loads into People Hub and can scale to big data volumes. It also supports web service access and data service integration, and it uses a scalable data model that allows new tables and columns to be added with minimal downstream impact.

Re-usable Components and Streamlined Development

By leveraging reusable components and platforms like HCM and RE, we streamlined their development processes, resulting in significant time and effort savings. The scalable data model and easy integration allowed for seamless addition of new tables and columns with minimal downstream impact, enhancing operational efficiency.

The global big data in education market size is expected to reach $68.5 billion by 2027, reflecting the growing importance of data analytics in higher education.

Solutions

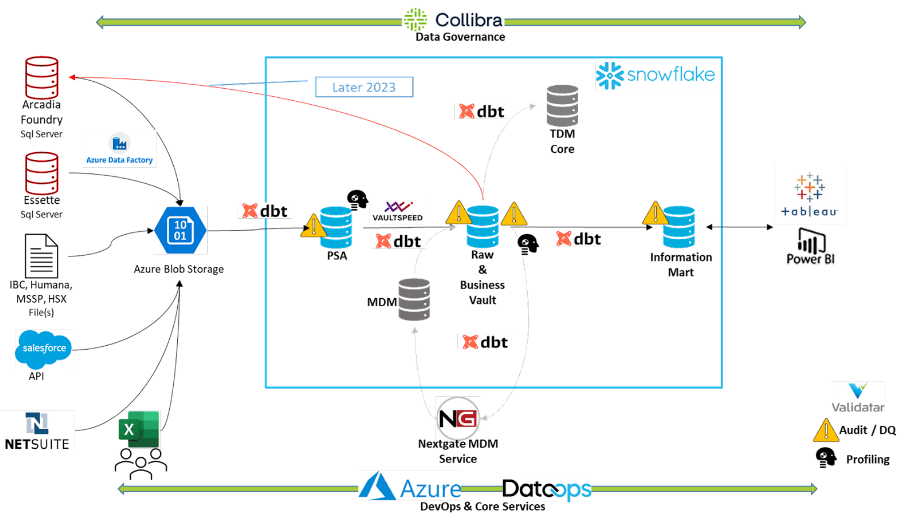

- Data Platform Modernization

- Data Warehousing

- Assessment Toolkit

Tech Stack

- Talend

- Microsoft SQL Server

- Workday Report As A Service (RAAS)

- Automation

- Higher Ed

- United States
